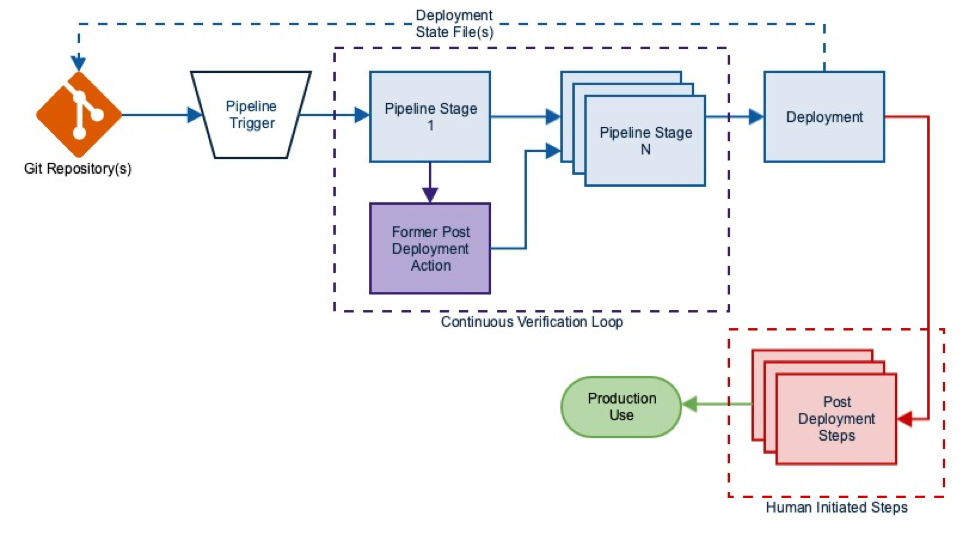

Managing deployments into the public cloud requires a strong set of guardrails to keep costs low and to ensure secure, efficient use of resources. With the constant change in features and capabilities on the hyperscalers (AWS/Azure/GCP), the CI/CD pipeline becomes the main control point for managing these guardrails. We discussed how to “shift left” day 2 operations into CI/CD using “Continuous Verification” in a previous blog.

In any public cloud operations process, there are multiple pipelines managing different aspects of the environment.

- Infrastructure pipelines – these are used to deploy environments into AWS for developers or production workloads. They are generally built with Terraform or another infrastructure-as-code tool (such as AWS CloudFormation templates). These scripts are executed in the pipeline, generally before the app is deployed or the developer gets access to the environment.

- Application pipelines – each LOB, project, etc. has its own application pipelines for deploying development or production workloads. The endpoints are generally set up by the infrastructure pipelines.

These two types of pipelines are constantly in motion, so managing their outcomes is important. Continuous Verification helps by providing a non-intrusive set of checks and balances in the pipeline.

In this article, we will review how you can implement Continuous Verification in an application deployment pipeline that targets AWS EC2.

We will use CloudBees running on GKE, with checks against CloudHealth and VMware Secure State.

Implementing Continuous Verification with CloudBees

Implementing Continuous Verification in an application deployment pipeline is straightforward. In this implementation we will use:

- Terraform to deploy the application into AWS

- CloudHealth by VMware to check the budget before deployment

- VMware Secure State to check the security posture post deployment

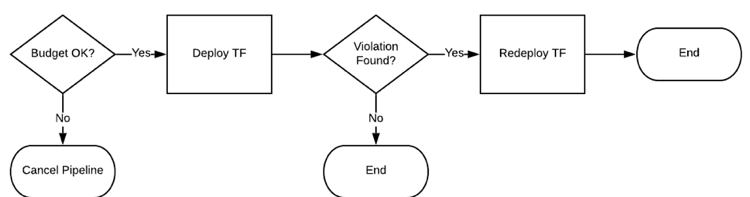

Here is the pipeline:

Each verification stage runs its logic in a Python script that is invoked from the Jenkinsfile.

Because the checks are packaged as Python scripts, or as Docker containers that include those scripts, you can embed them into any pipeline.

The additional component that we will use is a “policy” file that defines the limitations of the checks. These “policy” files define the SLA that the deployment needs to meet.
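To make the idea of a “policy” file concrete, here is a minimal sketch of one and of how a check script might load it. The JSON format and the field names (`monthly_budget_usd`, `max_violations`) are assumptions made for illustration; they are not the format used in the demo.

```python
import json
from dataclasses import dataclass, field


@dataclass(frozen=True)
class Policy:
    """Limits a deployment must meet (field names are hypothetical)."""
    monthly_budget_usd: float
    max_violations: dict = field(default_factory=dict)


def parse_policy(text: str) -> Policy:
    """Parse policy JSON and reject an obviously invalid budget."""
    raw = json.loads(text)
    budget = float(raw["monthly_budget_usd"])
    if budget < 0:
        raise ValueError("monthly_budget_usd must be non-negative")
    # Normalize severity names to lower case so lookups are predictable.
    limits = {str(k).lower(): int(v)
              for k, v in raw.get("max_violations", {}).items()}
    return Policy(monthly_budget_usd=budget, max_violations=limits)


# Illustrative policy only; the numbers are placeholders.
EXAMPLE_POLICY = """
{
  "monthly_budget_usd": 5000,
  "max_violations": {"high": 0, "medium": 3}
}
"""

if __name__ == "__main__":
    print(parse_policy(EXAMPLE_POLICY))
```

Keeping the limits in a file rather than in the scripts means the same check can be reused across projects with different SLAs.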

The output of the logic checks in our pipeline determines whether the pipeline proceeds or stops. However, there are additional steps that can be taken (not shown in this blog):

- A Jira ticket can be opened to have an Ops person alerted

- An approval process can be created to override the logic

- etc

Essentially, you can add whatever logic and actions you need at any point in this pipeline. It’s all about minimizing post-deployment remediation work.

Budget Check

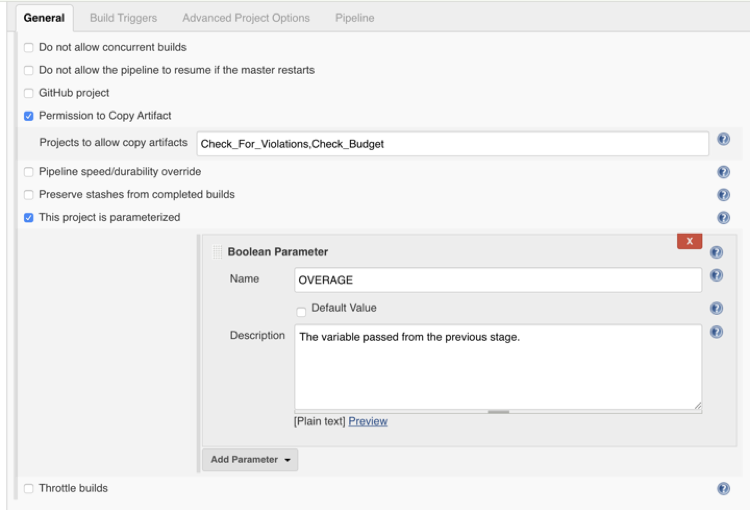

The budget check is done by making an API call into CloudHealth by VMware. We check how much money we have spent on this particular project this month and compare that number against our known budget. Depending on the outcome, we write a Boolean variable into Jenkins.

This allows us to pass variables between stages, so that a later stage can pass or fail the pipeline based on the Boolean value that was set. In this instance, if we have an overage, then we do not want the deploy stage to run.
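The comparison and the hand-off between stages can be sketched as follows. This is not the script from the demo: the CloudHealth API call is left out, the spend and budget numbers are placeholders, and the `gates.properties` file and `BUDGET_OK` name are hypothetical ways of carrying the Boolean from one stage to the next.

```python
from pathlib import Path


def within_budget(spend_to_date: float, monthly_budget: float) -> bool:
    """True when month-to-date spend has not exceeded the budget."""
    return spend_to_date <= monthly_budget


def write_gate(path: Path, name: str, passed: bool) -> None:
    """Append NAME=true|false to a properties-style file for later stages."""
    with path.open("a", encoding="utf-8") as fh:
        fh.write(f"{name}={'true' if passed else 'false'}\n")


def read_gate(path: Path, name: str) -> bool:
    """Return the last value recorded for name; a missing gate counts as failed."""
    result = False
    if path.exists():
        for line in path.read_text(encoding="utf-8").splitlines():
            key, _, value = line.partition("=")
            if key.strip() == name:
                result = value.strip() == "true"
    return result


if __name__ == "__main__":
    # Placeholder numbers: in the pipeline the spend would come from the
    # CloudHealth API call and the budget from the policy file.
    gate_file = Path("gates.properties")
    write_gate(gate_file, "BUDGET_OK", within_budget(4200.0, 5000.0))
    print(read_gate(gate_file, "BUDGET_OK"))
```

Treating a missing gate as a failure is a deliberate choice in this sketch: if the budget stage never ran, the deploy stage should not assume it passed.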

Cluster deployment

Next, we will use Terraform to deploy our application. This is as easy as running some code from our pipeline to install the Terraform dependencies and then running the commands to apply the Terraform configuration. The demo we have set up has two sets of files: the initial Terraform deployment, which is flawed on purpose, and the corrected Terraform files. This allows us to simulate a security issue and then deploy the correct version. The Terraform files and repo can be found here.
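As a rough sketch of what "running the commands" can look like from a Python step, the snippet below builds a non-interactive `terraform init` / `plan` / `apply` sequence and stops at the first failure. The working directory name and the `tfplan` file name are assumptions, and the demo repo may drive Terraform differently.

```python
import subprocess
from typing import List


def terraform_commands(plan_file: str = "tfplan") -> List[List[str]]:
    """Build the init / plan / apply sequence for non-interactive use."""
    return [
        ["terraform", "init", "-input=false"],
        ["terraform", "plan", "-input=false", f"-out={plan_file}"],
        # Applying a saved plan file does not prompt for approval.
        ["terraform", "apply", "-input=false", plan_file],
    ]


def deploy(workdir: str, dry_run: bool = False) -> bool:
    """Run each command in workdir; return False on the first failure."""
    for cmd in terraform_commands():
        if dry_run:
            print(" ".join(cmd))
            continue
        if subprocess.run(cmd, cwd=workdir).returncode != 0:
            return False
    return True


if __name__ == "__main__":
    # "terraform/" is a hypothetical directory holding the .tf files.
    # dry_run=True only prints the commands, so this runs without Terraform.
    deploy("terraform/", dry_run=True)
```

Saving the plan to a file and applying that file means the apply step executes exactly what the plan step showed.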

Security Check

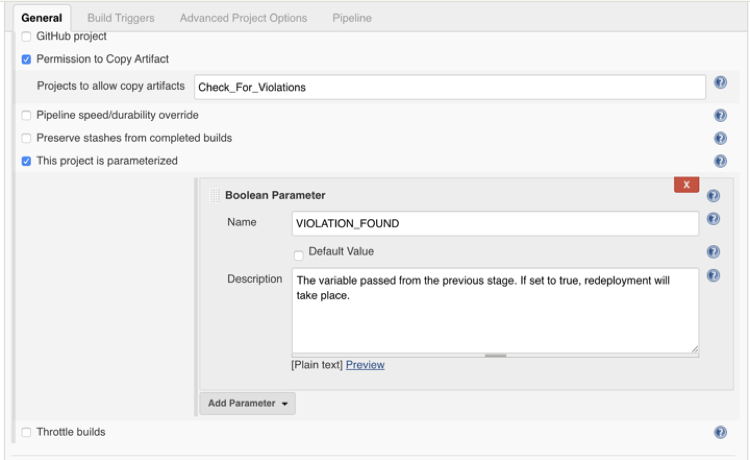

Now that the application has been deployed, we need to ensure the deployment is “clean”. Checking for violations is exactly like our budget stage, but it checks the previously deployed infrastructure for violations using VMware Secure State. This allows us to make sure that the infrastructure we deployed has the correct security measures in place. If this is not the case, then we want to fail the pipeline.

This is done in the same way, by adding another Boolean check ahead of the re-deploy stage. The stage reads the variable that our Python scripts wrote to decide whether the pipeline should continue.
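The decision behind that Boolean can be sketched as a comparison of violation counts against per-severity limits. The shape of each finding (a dict with a `severity` key), the rule names, and the limits are assumptions for illustration; they do not reflect the actual VMware Secure State response format.

```python
from collections import Counter
from typing import Iterable, List, Mapping


def count_by_severity(findings: Iterable[Mapping[str, str]]) -> Counter:
    """Count findings per lower-cased severity."""
    return Counter(f.get("severity", "unknown").lower() for f in findings)


def breaches(findings: Iterable[Mapping[str, str]],
             limits: Mapping[str, int]) -> List[str]:
    """Describe every severity whose count exceeds its allowed limit.

    Keys in limits are expected to be lower case.
    """
    counts = count_by_severity(findings)
    return [
        f"{severity}: {counts[severity]} found, {limit} allowed"
        for severity, limit in sorted(limits.items())
        if counts[severity] > limit
    ]


if __name__ == "__main__":
    # Made-up findings standing in for the results of the security check.
    findings = [
        {"severity": "High", "rule": "example-rule-a"},
        {"severity": "Medium", "rule": "example-rule-b"},
    ]
    problems = breaches(findings, {"high": 0, "medium": 3})
    security_ok = not problems
    for line in problems:
        print(line)
    print(f"SECURITY_OK={'true' if security_ok else 'false'}")
```

Returning the list of breached limits, rather than only a Boolean, gives the pipeline log something an Ops person can act on when the stage fails.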

Conclusion

As we’ve shown you, it’s fairly easy to add control points into the pipeline, and Jenkins allows you to do this easily. While we showed budget (with CloudHealth by VMware) and security (with VMware Secure State), you can add other checks into the pipeline, such as:

- Checking the performance of the app in a staging environment before it proceeds to production

- Checking for AWS service authorization before the application gets deployed.

- Etc

Hopefully you find this framework useful to improve your operations process.