As noted in one of my earlier blogs, one of the key issues with managing Kubernetes is observability. Observability is the ability to gain insight into multiple data points/sets from the Kubernetes cluster and analyze this data in resolving issues.

As review, observability for the cluster and application covers three areas:

- Monitoring metrics — Pulling metrics from the cluster, through cAdvisor, metrics server, and/or prometheus, along with application data which can be aggregated across clusters in Wavefront by VMware.

- Logging data — Whether its cluster logs, or application log information like syslog, these data sets are important analysis.

- Tracing data — generally obtained with tools like zipkin, jaeger, etc. and provide detailed flow information about the application

In this blog we will explore how to send log data from the Kubernetes cluster, using a standard fluentbit daemonset, to an instance of AWS Elasticsearch.

There are two possible configurations of AWS Elasticsearch

- public configuration of AWS Elasticsearch

- secured configuration of AWS Elasticsearch

The basic installation of fluentbit on Kubernetes (Cloud PKS) with public configuration is available on my git repo:

https://github.com/bshetti/fluentbit-setup-vke

In this blog we will discuss how to configure fluentbit with a secure version of AWS Elasticsearch.

Component basics:

The following is a quick overview of the main components used in this blog: Kubernetes logging, Elasticsearch, and Fluentbit

Kubernetes Logging:

Log output, whether its system level or application based or cluster based is aggregated in the cluster and is managed by Kubernetes.

As noted in Kubernetes documentation:

- Application based logging —

Everything a containerized application writes to stdout and stderr is handled and redirected somewhere by a container engine. For example, the Docker container engine redirects those two streams to a logging driver, which is configured in Kubernetes to write to a file in json format.

- System logs —

There are two types of system components: those that run in a container and those that do not run in a container. For example:

The Kubernetes scheduler and kube-proxy run in a container.

The kubelet and container runtime, for example Docker, do not run in containers.

On machines with systemd, the kubelet and container runtime write to journald. If systemd is not present, they write to .log files in the /var/log directory. System components inside containers always write to the /var/log directory, bypassing the default logging mechanism.

Fluentbit:

As noted in the previous section, Kubernetes logs to stdout, stderr, or to /var/log directory on the cluster. The ability to pull these logs out of the cluster or aggregate the stdout logs requires the use of a node-level logging agent on each node. (see “logging-agent-pod” in the diagram in the Kubernetes Logging section).

This is generally a dedicated deployment for logging, and is usually deployed in a daemonset (in order to collect from all nodes). This dedicated agent will push these logs to some “logging backend” (output location).

Fluentbit is such a node-level logging agent and is generally deployed in a daemonset. More information on Fluentbit, please visit www.fluentbit.io.

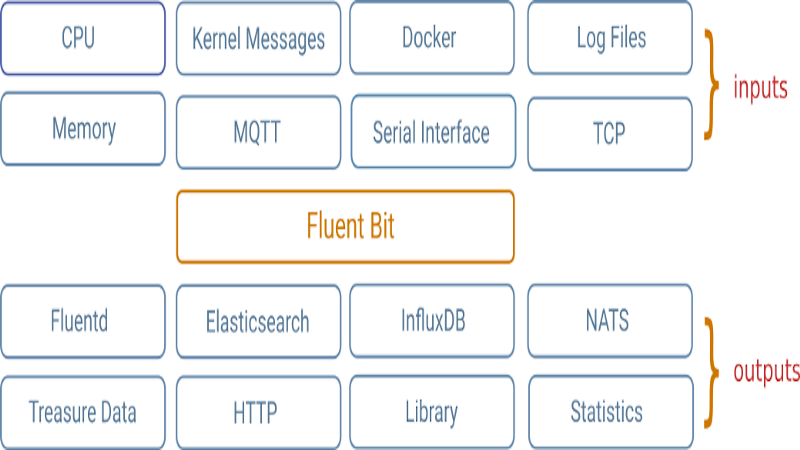

Fluentbit will pull log information from multiple locations on the kubernetes cluster and push it to one of many outputs.

In this blog we explore AWS Elasticsearch as one of those outputs

Elasticsearch:

Elasticsearch, is a search engine based on Lucene. It aggregates data from multiple locations, parses it, and indexes it, thus enabling the data to be searched. The input can be from anywhere and anything. Log aggregation is one of the multiple use cases for Elasticsearch. There is an opensource version and the commercial one from elastic.co.

AWS provides users with the ability to standup an elasticsearch “cluster” on EC2. AWS thus helps install, manage, scale, and monitor this cluster taking out the intricacies of operating elasticsearch.

Prerequisites

Before working through the configuration, the blog assumes the following:

- application logs are output to stdout from the containers — a great reference is found here in kubernetes documentation

- privilege access to install fluentbit daemonsets into “kube-system” namespace.

Privilege access may require different configurations on different platforms:

- KOPs — open source kubernetes installer and manager — if you are the installer then you will have admin access

- GKE — turn off the standard fluentd daemonset preinstalled in GKE cluster. Follow the instructions here.

- Cloud PKS (formerly VKE — VMware Kubernetes Engine) — Ensure you are running privilege clusters

This blog will use Cloud PKS which is a conformant kubernetes service.

Application and Kubernetes logs in Elasticsearch

Before we dive into the configuration, its important to understand what the output looks like.

I have configured my standard fitcycle application (as used in other blogs) with stdout. https://github.com/bshetti/container-fitcycle.

I’ve also configured fluentbit on Cloud PKS, and added a proxy (to access ES) to enable access to my ES cluster.

I have configured AWS Elasticsearch as a pubic deployment (vs VPC), but with Cognito configured for security.

AWS Elasticsearch Cognito login with user/password

AWS Elasticsearch displaying application log

AWS Elasticsearch displaying system level log

As you can see above, AWS Elasticsearch provides me with a rich interface to review and analyze the logs for both application and system.

Configuring and deploying fluentbit for AWS Elasticsearch

Fleuntbit configuration being used comes from the following standard Fluentbit github repository.

I have modified it and pushed the mods to https://github.com/bshetti/fluentbit-setup-vke

Setting up and configuring AWS Elasticsearch

The first step is properly configuring AWS Elasticsearch.

Configure AWS Elasticsearch as public access but with Cognito Authentication

This eliminates which VPC you specify the Elasticsearch cluster on. You can use the VPC configuration. I just choose not to for simplicity.

Configure authentication with Cognito

Once setup, you need to follow the steps from AWS to set up your ES policy, IAM roles, user pools, and users.

https://docs.aws.amazon.com/elasticsearch-service/latest/developerguide/es-cognito-auth.html

Setup user with policy and obtain keys

Once Elasticsearch is setup with Cognito, your cluster is secure. In order for fluentbit configuration to access elasticsearch, you need to create a user that has elasticsearch access privileges and obtain a the Access Key ID and Secret Access Key for that user.

The policy to assign the user is AmazonESCognitoAccess. (This is setup by Cognito).

Deploying fluentbit with es-proxy

This section uses the instructions outlined in:

https://github.com/bshetti/fluentbit-setup-vke

Specifically the “Elasticsearch in secure mode” part of the READ.me

Now that you have successfully set up elasticsearch on AWS, we will deploy fluentbit with an elasticsearch proxy.

Fluentbit does not support AWS authentication, and even with Cognito turned on, access to the elasticsearch indices is restricted to use of AWS authentication (i.e. key pairs). Keypairs etc are not supported yet (at the time of writing this blog) in fluentbit.

Hence we must front end fluentbit with an elasticsearch proxy that has the AWS authentication built in.

I’ve developed a kubernetes deployment for the following open source aws-es-proxy.

https://github.com/abutaha/aws-es-proxy

My aws-es-proxy kubernetes deployment files are located here:

https://github.com/bshetti/fluentbit-setup-vke/tree/master/output/es-proxy

- First step is to configure the Kubernetes cluster for fluentbit

Fluent Bit must be deployed as a DaemonSet, so on that way it will be available on every node of your Kubernetes cluster. To get started run the following commands to create the namespace, service account and role setup:

$ kubectl create namespace logging

$ kubectl create -f fluent-bit-service-account.yaml

$ kubectl create -f fluent-bit-role.yaml

$ kubectl create -f fluent-bit-role-binding.yaml

- Next step is to modify and deploy the fluentbit configuration mapping.

Modify /output/es-proxy/fluent-bit-configmap.yaml with the following change:

output-elasticsearch.conf: |

[OUTPUT]

Name es

Match *

Host ${FLUENT_ELASTICSEARCH_HOST}

Port ${FLUENT_ELASTICSEARCH_PORT}

Logstash_Format On

Retry_Limit False

tls Off <---- must be configured to Off (On is default)

tls.verify Off

Next create the configmap (will deploy in the logging namespace)

kubectl create -f ./output/elasticsearch/fluent-bit-configmap.yaml

- Last step - Run the es-proxy

Change the following parameters in the ./output/es-proxy/es-proxy-deployment.yaml file with your parameters (from setting up the user in AWS with ES access)

- name: AWS_ACCESS_KEY_ID

value: "YOURAWSACCESSKEY"

- name: AWS_SECRET_ACCESS_KEY

value: "YOURAWSSECRETACCESSKEY"

- name: ES_ENDPOINT

value: "YOURESENDPOINT"

Now run:

kubectl create -f ./output/es-proxy/es-proxy-deployment.yaml

kubectl create -f ./output/es-proxy/es-proxy-service.yaml

This will now have es-proxy service running on port 9200 in the logging namespace

- Now run the fluentbit daemon set

Simply deploy the following file:

kubectl create -f ./output/elasticsearch/fluent-bit-ds-with-proxy.yaml