Deploying applications to the cloud has become the norm, and the majority of them land on AWS, GCP, or Azure. More and more of these applications are also packaged as containers and run on Kubernetes.

Kubernetes is becoming mainstream and a “mainstay” in many organizations. As adoption grows, so does the number of Kubernetes offerings and the methods available to build and manage clusters.

There are many Kubernetes options for deploying a containerized application:

- Custom deployment solutions: VMware Essential PKS, Kubespray, VMware Enterprise PKS, Stackpoint, etc.

- Turnkey solutions: AWS EKS, Azure AKS, GKE on GCP or on-prem, VMware Cloud PKS, etc.

Depending on your company’s needs, either or both of these options may be used; there is no right or wrong choice. Turnkey options are clearly the easier of the two because they provide:

- Prebuilt, managed clusters

- Semi- or fully automated cluster scaling

- Well-integrated security

- General Kubernetes conformance, which makes them ecosystem-friendly

- Usable endpoints for common CI/CD tools such as Jenkins and Spinnaker

- Simple, easy update/upgrade options

Custom deployments, on the other hand, give you unfettered control and customization. In addition, many open source tools can help you build an environment similar to a turnkey solution (e.g., HashiCorp Vault for key management and Heptio’s Velero for backup).

Given the trend toward public cloud, and with the bulk of users on AWS, I will focus on what it takes to deploy and manage an application on AWS EKS, a common endpoint for deploying Kubernetes applications on AWS.

In a previous blog (Simplifying EKS Deployments and Management), I focused on EKSCTL as a convenient alternative to the console or CloudFormation templates. In this blog, I will look at another alternative, Pulumi, and walk you through how to use it with Python to get an EKS cluster running.

Other blogs walk through the same process in Go, such as Create an EKS Cluster with Pulumi and GoLang.

Prerequisites

To walk through this setup, you will need to ensure that:

- Python 3.x is installed

- The AWS CLI is installed and configured with the proper keys

- Pulumi is installed and set up for AWS with Python: follow the instructions in the Pulumi docs up to and including creating a new project

- kubectl 1.14+ is installed, so you can access the cluster
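Before going further, it can help to confirm the command-line tools are actually reachable. The sketch below is a minimal pre-flight check using only the standard library; check_prerequisites is a hypothetical helper, and it only checks that each CLI is on PATH, not that your AWS keys are valid or that kubectl is 1.14+.

```python
import shutil
import sys
from typing import Callable, Dict, Optional

# Hypothetical helper: the tools below are the CLIs from the prerequisites list.
REQUIRED_TOOLS = ("aws", "pulumi", "kubectl")


def check_prerequisites(
    which: Callable[[str], Optional[str]] = shutil.which,
) -> Dict[str, bool]:
    """Report whether each prerequisite is available.

    Only checks that each CLI is on PATH; it does not verify AWS credentials
    or the kubectl version. `which` is injectable so this can be tested
    without the real tools installed.
    """
    results = {"python3": sys.version_info.major == 3}
    for tool in REQUIRED_TOOLS:
        results[tool] = which(tool) is not None
    return results


if __name__ == "__main__":
    for name, found in check_prerequisites().items():
        print(f"{name:8} {'ok' if found else 'MISSING'}")
```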

Pulumi setup

If you followed the Pulumi setup instructions, you should have created a new project, and your project folder should contain the following files:

- Pulumi.dev.yaml - defines the configuration values for the project's dev stack

- Pulumi.yaml - defines the project

- __main__.py - contains the sample program; in the Pulumi example, it creates an S3 bucket

You can go ahead and try bringing up the sample stack. However, I will instead walk through the Python code needed to bring up a properly configured EKS cluster.

Python EKS Configuration

The best baseline code for bringing up the EKS cluster is Pulumi’s example on GitHub, Pulumi EKS Example.

The four files you should copy into the project you created in the previous step are:

- __main__.py - builds the EKS cluster

- iam.py - defines the IAM roles needed to set up the EKS cluster and its node group

- vpc.py - defines the VPC and subnet components for the EKS cluster

- requirements.txt - lists the required Python libraries

With these files in place, you can already build an EKS cluster.

JUST REMEMBER TO RUN THIS IN A VIRTUAL ENV
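If you are not sure whether a virtual environment is active, Python can tell you. This is a minimal sketch (in_virtualenv is a hypothetical helper name) that you could run with the same python you use for Pulumi, before installing requirements.txt.

```python
import sys


def in_virtualenv() -> bool:
    """Return True when this interpreter is running inside a virtual environment.

    With `python -m venv` (and virtualenv 20+), sys.prefix points at the venv
    while sys.base_prefix still points at the base installation. Older
    virtualenv releases set sys.real_prefix instead.
    """
    return hasattr(sys, "real_prefix") or sys.prefix != sys.base_prefix


if __name__ == "__main__":
    if in_virtualenv():
        print(f"OK: using virtual environment at {sys.prefix}")
    else:
        print("WARNING: not in a virtual environment; activate your venv before `pulumi up`")
```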

However, these files are just a baseline; you will need to modify them to get exactly what you need.

One example:

Running these files as-is gives you an auto-generated EKS cluster name, such as eks-cluster-89e097d.
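The random suffix comes from Pulumi's auto-naming: when no explicit name is given, the resource's logical name ('eks-cluster') has a random suffix appended. The sketch below is a simplified model of that behavior, not Pulumi's implementation; physical_name is a hypothetical helper, and the 7-character hex suffix is an assumption based on the example name above.

```python
import secrets
from typing import Optional


def physical_name(logical_name: str, explicit_name: Optional[str] = None) -> str:
    """Simplified model of how the EKS cluster's name is chosen.

    Assumption: mirrors the example name eks-cluster-89e097d, i.e. the logical
    resource name plus a random 7-character hex suffix. An explicit `name`
    argument on the resource is used verbatim instead.
    """
    if explicit_name:
        return explicit_name
    suffix = secrets.token_hex(4)[:7]  # 8 random hex chars, truncated to 7
    return f"{logical_name}-{suffix}"


print(physical_name("eks-cluster"))                   # random, e.g. eks-cluster-<7 hex chars>
print(physical_name("eks-cluster", "myClusterName"))  # myClusterName
```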

However, if you want to use specific names in the deployment, you need to add config variables to the Pulumi.dev.yaml file.

For instance:

```yaml
config:
  aws:region: us-east-2
  eks-test:cluster-name: myClusterName
```

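I’ve added eks-test:cluster-name: myClusterName to the Pulumi.dev.yaml file. The eks-test prefix is the project name, which Pulumi uses as the namespace for the project's own config keys. Next, this variable needs to be read in the Python code.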

I will add the following lines to __main__.py:

```python
config = pulumi.Config()

# ...and, inside the eks.Cluster(...) call:
name=config.require('cluster-name'),
```
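Why does config.require('cluster-name') find a key written as eks-test:cluster-name? Stack config keys are namespaced as namespace:key, and pulumi.Config() with no argument uses the project name (eks-test here) as its namespace, while aws:region lives in the aws namespace read by the AWS provider. require raises an error when the key is missing; get returns None instead. The stdlib sketch below models that lookup so it runs without Pulumi; config_get, config_require, and MissingConfigError are hypothetical names, not Pulumi's API.

```python
from typing import Dict, Optional


class MissingConfigError(KeyError):
    """Hypothetical stand-in for the error Pulumi raises on a missing key."""


def config_get(stack_config: Dict[str, str], project: str, key: str) -> Optional[str]:
    # Keys in Pulumi.<stack>.yaml are namespaced as "<namespace>:<key>";
    # a Config() with no argument uses the project name as the namespace.
    return stack_config.get(f"{project}:{key}")


def config_require(stack_config: Dict[str, str], project: str, key: str) -> str:
    value = config_get(stack_config, project, key)
    if value is None:
        raise MissingConfigError(f"missing required configuration variable '{project}:{key}'")
    return value


# Mirrors the Pulumi.dev.yaml entries shown earlier.
dev_config = {"aws:region": "us-east-2", "eks-test:cluster-name": "myClusterName"}

print(config_require(dev_config, "eks-test", "cluster-name"))  # myClusterName
print(config_get(dev_config, "eks-test", "node-count"))         # None
```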

Here is the modified code:

```python
import iam
import vpc
import pulumi
from pulumi_aws import eks

# Reads values from the stack's config file (Pulumi.dev.yaml for the dev stack)
config = pulumi.Config()

## EKS Cluster

eks_cluster = eks.Cluster(
    'eks-cluster',
    # Physical EKS cluster name taken from config instead of being auto-generated
    name=config.require('cluster-name'),
    role_arn=iam.eks_role.arn,
    tags={'Name': 'pulumi-eks-cluster'},
    vpc_config={
        'publicAccessCidrs': ['0.0.0.0/0'],
        'security_group_ids': [vpc.eks_security_group.id],
        'subnet_ids': vpc.subnet_ids,
    },
)

## EKS Node Group

eks_node_group = eks.NodeGroup(
    'eks-node-group',
    cluster_name=eks_cluster.name,
    node_group_name='pulumi-eks-nodegroup',
    node_role_arn=iam.ec2_role.arn,
    subnet_ids=vpc.subnet_ids,
    tags={
        'Name': 'pulumi-cluster-nodeGroup',
    },
    scaling_config={
        'desired_size': 2,
        'max_size': 2,
        'min_size': 1,
    },
)

# Show the cluster name in the `pulumi up` outputs
pulumi.export('cluster-name', eks_cluster.name)
```

Likewise, you can add more parameters to the Pulumi.dev.yaml file and use them in __main__.py.
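For example, the node group sizes could come from config instead of being hard-coded. In __main__.py itself you would more likely call pulumi.Config's get_int (or require_int) for each value; this stdlib-only sketch models the same idea so it runs without Pulumi, and adds the size check EKS enforces so a bad config fails before pulumi up starts creating resources. The function name and the desired-size, min-size, and max-size keys are hypothetical.

```python
from typing import Dict


def scaling_config_from(stack_config: Dict[str, str], project: str = "eks-test") -> Dict[str, int]:
    """Build a node group scaling_config from string-valued stack config.

    Hypothetical keys: <project>:desired-size, <project>:min-size and
    <project>:max-size. Defaults match the hard-coded 2 / 1 / 2 in __main__.py.
    """

    def get_int(key: str, default: int) -> int:
        raw = stack_config.get(f"{project}:{key}")
        return default if raw is None else int(raw)  # config values arrive as strings

    desired = get_int("desired-size", 2)
    minimum = get_int("min-size", 1)
    maximum = get_int("max-size", 2)

    # EKS rejects a node group unless 0 <= min <= desired <= max and max >= 1.
    if maximum < 1 or not 0 <= minimum <= desired <= maximum:
        raise ValueError(f"invalid sizes: min={minimum}, desired={desired}, max={maximum}")
    return {"desired_size": desired, "min_size": minimum, "max_size": maximum}


print(scaling_config_from({"eks-test:desired-size": "3", "eks-test:max-size": "4"}))
# {'desired_size': 3, 'min_size': 1, 'max_size': 4}
```

The returned dict has the same shape as the scaling_config argument passed to eks.NodeGroup.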

Building the cluster

Once you have made your modifications to __main__.py, you only need to run:

```shell
pulumi up
```

That’s it!!!

Pulumi will use __main__.py to build the EKS cluster.

You should see output similar to the following:

```
(venv) ubuntu@ip-172-31-35-91:~/pulumi$ pulumi up
Previewing update (dev):

     Type                              Name                                Plan
 +   pulumi:pulumi:Stack               eks-test-dev                        create
 +   ├─ aws:iam:Role                   ec2-nodegroup-iam-role              create
 +   ├─ aws:iam:Role                   eks-iam-role                        create
 +   ├─ aws:ec2:Vpc                    eks-vpc                             create
 +   ├─ aws:iam:RolePolicyAttachment   eks-workernode-policy-attachment    create
 +   ├─ aws:iam:RolePolicyAttachment   eks-cni-policy-attachment           create
 +   ├─ aws:iam:RolePolicyAttachment   ec2-container-ro-policy-attachment  create
 +   ├─ aws:iam:RolePolicyAttachment   eks-service-policy-attachment       create
 +   ├─ aws:iam:RolePolicyAttachment   eks-cluster-policy-attachment       create
 +   ├─ aws:ec2:InternetGateway        vpc-ig                              create
 +   ├─ aws:ec2:RouteTable             vpc-route-table                     create
 +   ├─ aws:ec2:Subnet                 vpc-subnet-us-east-2a               create
 +   ├─ aws:ec2:Subnet                 vpc-subnet-us-east-2c               create
 +   ├─ aws:ec2:Subnet                 vpc-subnet-us-east-2b               create
 +   ├─ aws:ec2:SecurityGroup          eks-cluster-sg                      create
 +   ├─ aws:ec2:RouteTableAssociation  vpc-route-table-assoc-us-east-2b    create
 +   ├─ aws:ec2:RouteTableAssociation  vpc-route-table-assoc-us-east-2a    create
 +   ├─ aws:eks:Cluster                eks-cluster                         create
 +   ├─ aws:ec2:RouteTableAssociation  vpc-route-table-assoc-us-east-2c    create
 +   └─ aws:eks:NodeGroup              eks-node-group                      create

Resources:
    + 20 to create

Do you want to perform this update? yes
Updating (dev):

     Type                              Name                                Status
 +   pulumi:pulumi:Stack               eks-test-dev                        created
 +   ├─ aws:iam:Role                   ec2-nodegroup-iam-role              created
 +   ├─ aws:iam:Role                   eks-iam-role                        created
 +   ├─ aws:ec2:Vpc                    eks-vpc                             created
 +   ├─ aws:iam:RolePolicyAttachment   eks-workernode-policy-attachment    created
 +   ├─ aws:iam:RolePolicyAttachment   eks-cni-policy-attachment           created
 +   ├─ aws:iam:RolePolicyAttachment   ec2-container-ro-policy-attachment  created
 +   ├─ aws:iam:RolePolicyAttachment   eks-service-policy-attachment       created
 +   ├─ aws:iam:RolePolicyAttachment   eks-cluster-policy-attachment       created
 +   ├─ aws:ec2:InternetGateway        vpc-ig                              created
 +   ├─ aws:ec2:Subnet                 vpc-subnet-us-east-2a               created
 +   ├─ aws:ec2:Subnet                 vpc-subnet-us-east-2c               created
 +   ├─ aws:ec2:SecurityGroup          eks-cluster-sg                      created
 +   ├─ aws:ec2:Subnet                 vpc-subnet-us-east-2b               created
 +   ├─ aws:ec2:RouteTable             vpc-route-table                     created
 +   ├─ aws:eks:Cluster                eks-cluster                         created
 +   ├─ aws:ec2:RouteTableAssociation  vpc-route-table-assoc-us-east-2a    created
 +   ├─ aws:ec2:RouteTableAssociation  vpc-route-table-assoc-us-east-2c    created
 +   ├─ aws:ec2:RouteTableAssociation  vpc-route-table-assoc-us-east-2b    created
 +   └─ aws:eks:NodeGroup              eks-node-group                      created

Outputs:
    cluster-name: "myClusterName"

Resources:
    + 20 created

Duration: 12m0s

Permalink: https://app.pulumi.com/bshetti/eks-test/dev/updates/5
warning: A new version of Pulumi is available. To upgrade from version '1.13.0' to '1.13.1', run
   $ curl -sSL https://get.pulumi.com | sh
or visit https://pulumi.com/docs/reference/install/ for manual instructions and release notes.
```

Notice that the cluster-name output is set to myClusterName.

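NOTE: the cluster name (myClusterName, the physical name of the EKS cluster in AWS) is different from the Pulumi resource name (eks-cluster, the logical name Pulumi uses to track the resource in its state).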
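To use kubectl (from the prerequisites) against the new cluster, you need a kubeconfig entry for it, which the AWS CLI's aws eks update-kubeconfig command can write. It expects the EKS cluster name, i.e. myClusterName, which you can also read back with pulumi stack output cluster-name. The Python sketch below assumes kubectl runs with the same AWS identity that ran pulumi up, since by default only the identity that created the cluster has admin access to it; update_kubeconfig_cmd and configure_kubectl are hypothetical helpers.

```python
import subprocess
from typing import List


def update_kubeconfig_cmd(cluster_name: str, region: str) -> List[str]:
    """AWS CLI command that adds (or refreshes) a kubeconfig entry for an EKS cluster.

    cluster_name must be the EKS cluster name (myClusterName), not the Pulumi
    resource name (eks-cluster).
    """
    return ["aws", "eks", "update-kubeconfig", "--name", cluster_name, "--region", region]


def configure_kubectl(cluster_name: str, region: str) -> None:
    """Write the kubeconfig entry, then list the node group's worker nodes."""
    subprocess.run(update_kubeconfig_cmd(cluster_name, region), check=True)
    subprocess.run(["kubectl", "get", "nodes"], check=True)


# Usage, once `pulumi up` has finished (needs the AWS CLI and kubectl on PATH):
# configure_kubectl("myClusterName", "us-east-2")
```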

What is Pulumi building?

Pulumi will bring up the following components for the EKS cluster.

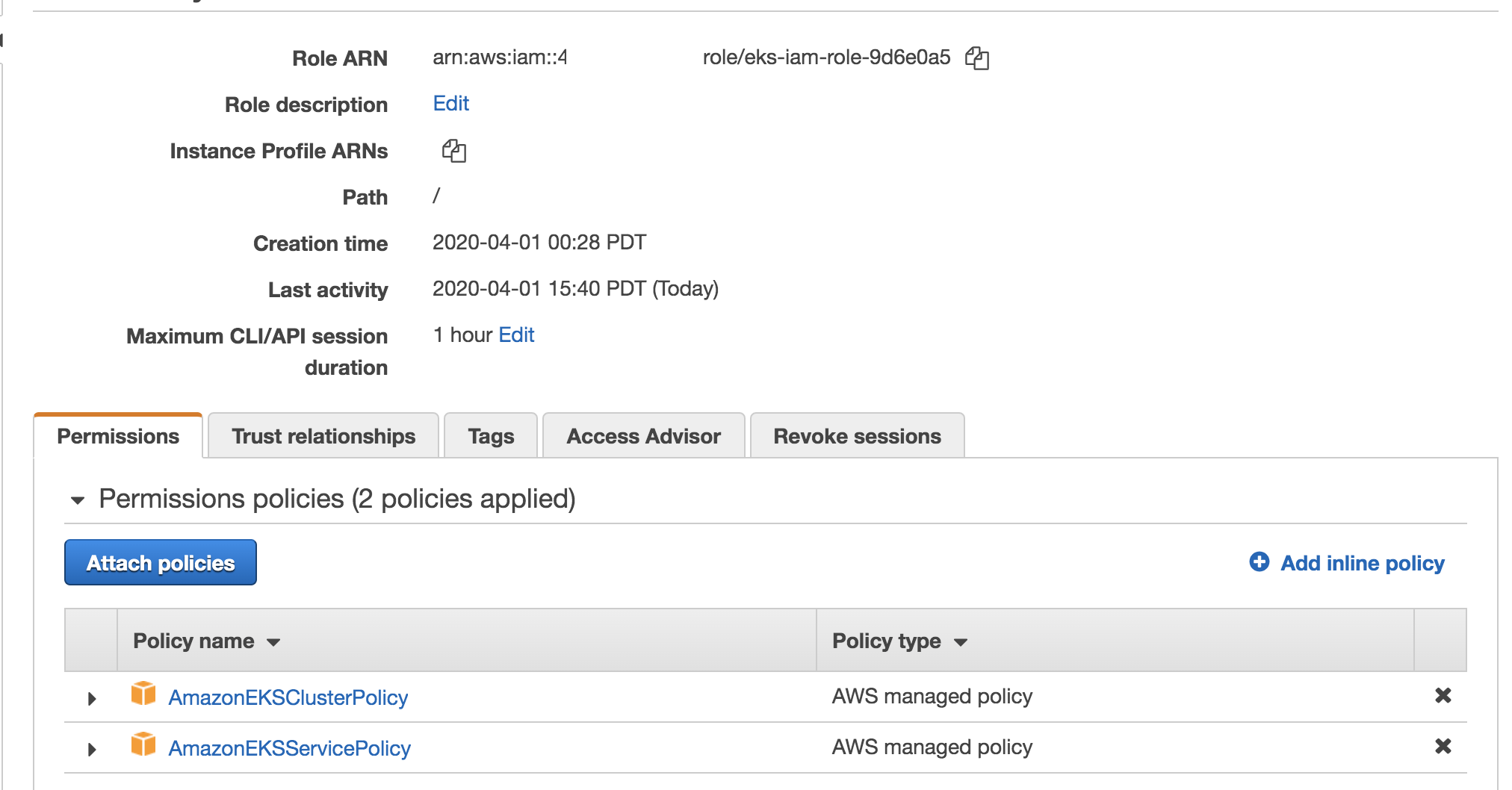

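- IAM roles for the cluster and the node group, defined in iam.py. Two managed policies are attached to the cluster role (eks-iam-role):

  - AmazonEKSClusterPolicy

  - AmazonEKSServicePolicy

  The node group role (ec2-nodegroup-iam-role) gets the worker node, CNI, and ECR read-only policies, which appear as the other three RolePolicyAttachment resources in the output above.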

You should see something like this:

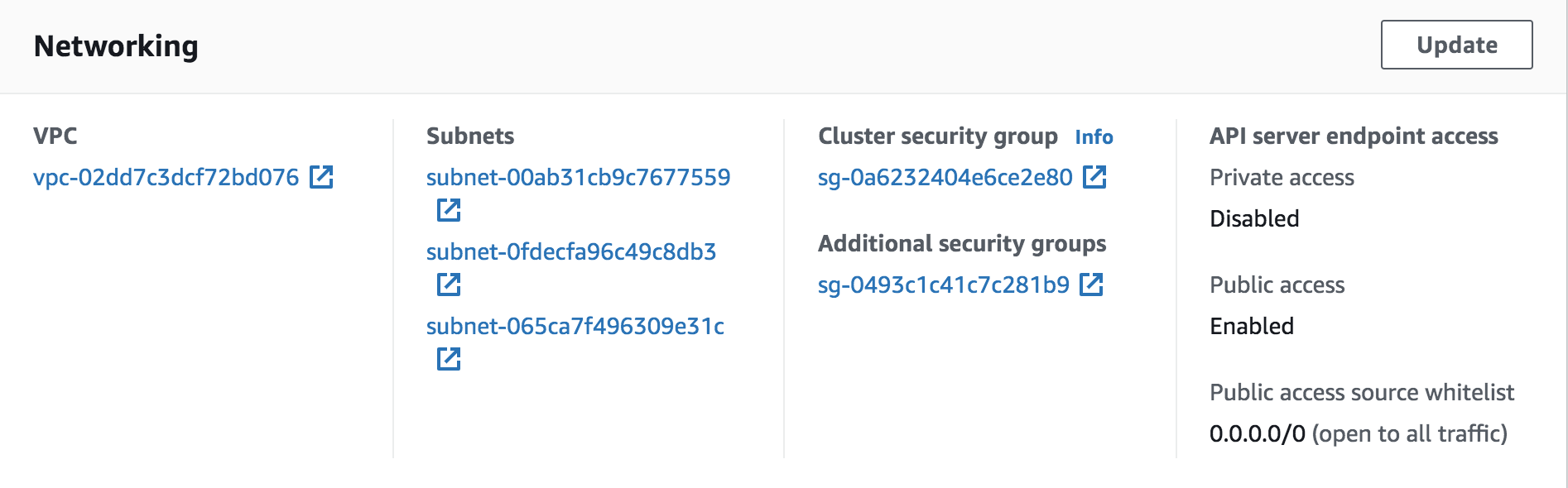

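- vpc.py sets up the networking for EKS: one VPC and three subnets (one in each of three Availability Zones), plus the internet gateway, route table, and security group shown in the output above.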

You should see something like this:

- __main__.py configures and builds the EKS cluster and its node group.
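vpc.py gives the cluster one subnet per Availability Zone; EKS requires subnets in at least two AZs, and spreading nodes across three also improves availability. The carving step can be illustrated with Python's ipaddress module. This is a sketch only: plan_subnets is a hypothetical helper, and the 10.100.0.0/16 VPC CIDR and /24 subnet size are assumptions, so check vpc.py for the blocks it actually uses.

```python
import ipaddress
from typing import Dict, List


def plan_subnets(vpc_cidr: str, zones: List[str], new_prefix: int = 24) -> Dict[str, str]:
    """Assign one non-overlapping subnet per Availability Zone.

    Assumption: a /16 VPC split into /24 subnets, taken in address order.
    """
    vpc = ipaddress.ip_network(vpc_cidr)
    blocks = vpc.subnets(new_prefix=new_prefix)  # generator of subnets in address order
    plan: Dict[str, str] = {}
    for zone, block in zip(zones, blocks):
        plan[zone] = str(block)
    if len(plan) < len(zones):
        raise ValueError("VPC CIDR is too small for one subnet per zone")
    return plan


print(plan_subnets("10.100.0.0/16", ["us-east-2a", "us-east-2b", "us-east-2c"]))
# {'us-east-2a': '10.100.0.0/24', 'us-east-2b': '10.100.1.0/24', 'us-east-2c': '10.100.2.0/24'}
```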

Conclusion

Once you have a working Pulumi project and the Python files listed above, it’s easy to adapt them to your operational needs. The files can be modified and managed in git like any other code, and developers, not just DevOps engineers, can take control of some deployments if needed.

The simplicity of Pulumi is that once the code is set, the config file is the only file that should need to change significantly. With a simple pulumi up, your EKS cluster is up in minutes.

No more fussing with CloudFormation. Pulumi is a great alternative to EKSCTL in that it provides more flexibility and control. However, for a simple, quick-and-dirty EKS bring-up, EKSCTL is truly very easy.